The Future Product Days (FPD 2025) conference in Copenhagen addressed the "big almost existential challenges" facing product teams, designers, and leaders. Opinions differed, but everyone could agree on one thing: "things are changing".

Thought leaders at the conference proposed a fundamental shift in focus, structure, and culture to build great products in this high-stakes environment, where digital products are seen as the basic building blocks of modern life.

Why: Build Products That Solve Real (User) Problems

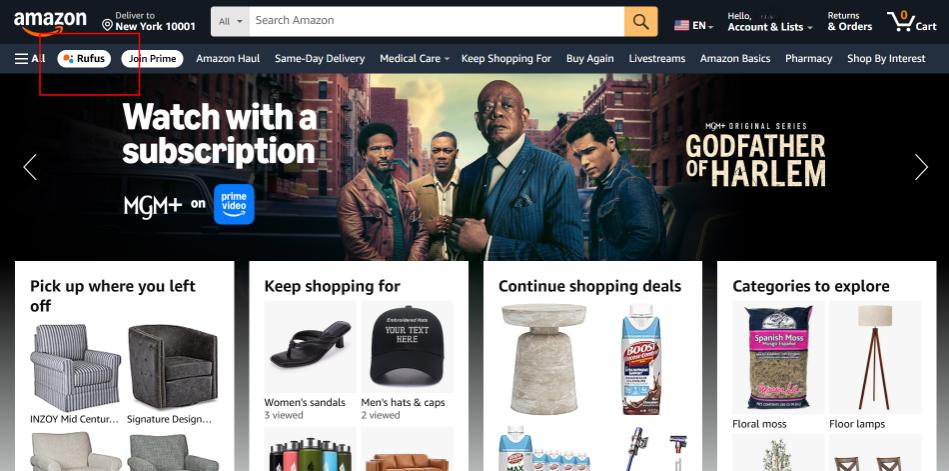

One of the key challenges in the age of AI is ensuring that new technology provides meaningful help and is adopted by users, fighting the instinct to pursue "tech for tech sake", or, as Kate Moran put it: “AI Is Not a Value Proposition”. Teams must avoid using technology in ways that make things more complex. One example highlighted was Amazon’s AI assistant “Rufus”, which raised more questions than answers:

- Who the f*ck is Rufus?

- And why is it positioned next to the categories?

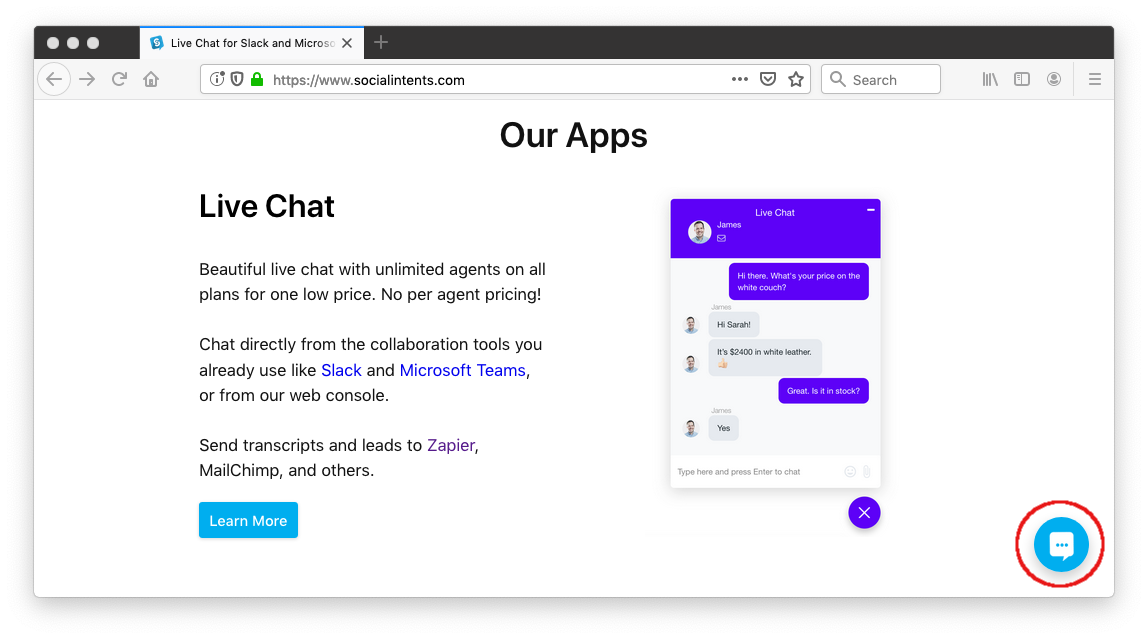

- If only there were a place in the UI that had established itself as an entry point for chats 😉


The focus must be on solving real problems for users. But even when a feature solves a real problem, users still need to be aware of it and able to use it. Unfortunately, even valuable AI features often fail to stick. Nielsen Norman Group research groups the adoption challenges into three main reasons:

- No Real User Value: The feature looks cool but does not genuinely help solve a problem, leading to its quiet retirement, as seen with LinkedIn's AI-generated follow-up questions feature.

- Execution Failure (Discoverability): Features are hidden, given non-descriptive names (like "Rufus" in the Amazon example), or their implementation breaks standard user expectations and mental models. This leads to a "massive design failure" where users have to seek external instructions (e.g., on TikTok or Reddit) to find and use the feature.

- Lack of Usability: Users, conditioned by years of search engines, often treat conversational AI like a "slightly smarter search box," throwing in short phrases instead of the detailed context the tool needs, resulting in bad outcomes and frustration.